On Thursday, Twitter announced it will roll out two features it’s been testing with verified users over the past couple of years: the ability to limit the notifications you receive so that they only come from people you follow, and the option to switch on a somewhat mysterious “Quality Filter.” As the company’s announcement post described it, that tool uses “a variety of signals, such as account origin and behavior” that screen “lower-quality content, like duplicate [tweets] or content that appears to be automated.”

It’s good to see that the company, whose own CEO admits it sucks at dealing with trolls and which former employees have described as “a honeypot for assholes,” is trying to hide the swarm. And according to a few verified members who have been using the features for a while, they’re generally helpful, if incredibly late.

But Randi Lee Harper, an anti-harassment activist who recently gained access to the Quality Filter tool, raises an interesting point about it: “Quality Filter is for a very specific kind of abuse.” She would know. Harper has spent about two years tweaking her own program to filter threatening tweets, building a set of rules that, like an anti-spam filter, looks for patterns in the data. Her system weighed factors like account creation dates, egg profile pics, a user’s interactions, and so on. But even after compiling that extensive list, she could identify only abuse that was hyper-specific to the Twitter community.

Ultimately, she concluded that even if Twitter’s Quality Filter were effective, she would still be wary of using something that blocked out the noise entirely. “If you’re under fire, you need to take the time to review those tweets to see if any require action, such as involving the police,” she wrote.

The difference between Harper’s filter and Twitter’s is a certain willful blindness. Just because Twitter has made it so you can’t see a person’s disgusting threats doesn’t mean you haven’t been threatened. Shouldn’t we at least have the option to review everything, even what Twitter’s algorithm flags as harassment? If Twitter’s past handling of these issues is any indication, it needs all the feedback it can get.

As we’ve seen in other instances, slapping a one-size-fits-all Band-Aid onto a social network that’s valued for its generally democratic atmosphere introduces a whole new set of issues. It’s kind of like that Simpsons episode where Springfield plans to stop a lizard infestation by introducing “wave after wave of” lizard-eating Chinese needle snakes, and then snake-eating gorillas. Everyone has a harrowing tale about the time they discovered Facebook’s “Other” inbox. And we recently saw how Instagram was able to arbitrarily protect one very famous celebrity’s account under the guise of cleaning up abusive comments.

This is all to say that internet filters are not simple. Protecting users while also giving them access is a difficult order to fill, and probably an impossible one. Right now, we’re witnessing social networks struggle with the issue, but let me take you back to another era, before egg-sent tweets and Instagram comments, even before Facebook messaging. Back to the regular ol’ internet, when digital web-wide babysitters existed. Let me tell you about Net Nanny, software that lets parents block their children from whatever evil they fear the internet holds.

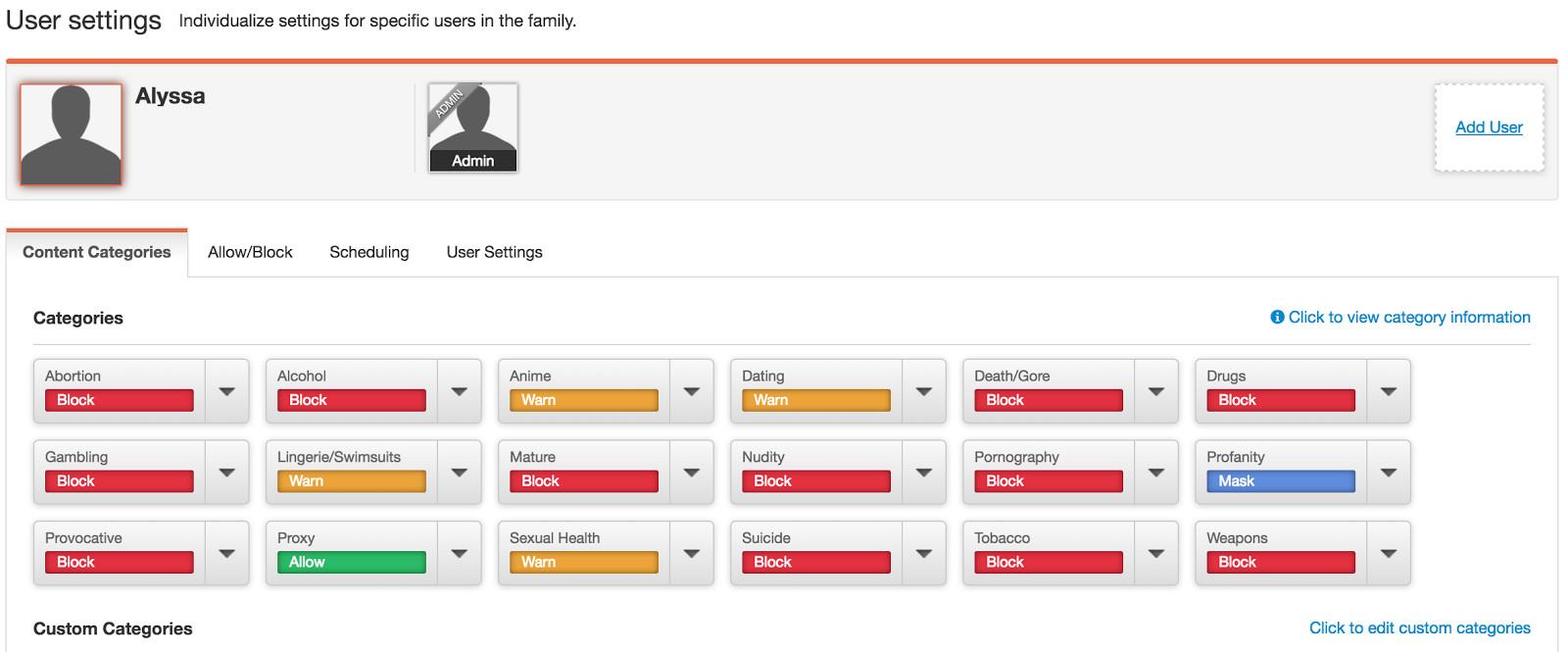

Created and released by Gordon Ross in 1995, Net Nanny was the internet’s original quality filter. Like Twitter’s new tool, it traces its origins to an ugly alley of the internet: a sting operation in which Ross observed a pedophile soliciting a child in a chat room, which led him to realize that “society would require an internet protecting service in the new digital age.” For $39.99 a year, the program gave parents free rein to regulate whatever their children encountered while surfing online, whether profanity, discussions about abortion, anime, nudity, or simply “provocative” content.

Net Nanny is not the only program of its kind (I see you, Christian Broadband), but it is an example of how internet filtering began, and how flawed it was (and is). In the world of Net Nanny, visiting the Victoria’s Secret website is deemed “provocative,” while a Google image search of “best lingerie” is permitted. Looking up another word for “abusive” on Thesaurus.com, as I am ashamed to admit I did for this article, is for some inexplicable reason considered “mature.” Gawker and BuzzFeed are out of the question, yet somehow a Redbook post on how to give a good blow job is OK.

Considering that Net Nanny has been featured in many a tech advice column, and is now overseen by a company called ContentWatch, you’d think it would have addressed some of these oversights. But this is the downfall of any filter, whether it’s for a social network or your entire web browser: The internet embraces and rejects new slang, celebrities, websites, and social networks at a breakneck pace. What was once offensive no longer is, and on and on.

That’s not to say that Twitter’s latest effort is as ham-handed as a browser-regulating program. But it’s proof that unless the company can adapt its filter at the speed at which its own community adopts new language and practices, the filter could very well become just as irrelevant.

Also, it’s worth mentioning that Net Nanny has something Twitter doesn’t: the ability to know what content is being blocked, and the personal choice to control that. I never thought I’d say this, but maybe Twitter could learn something from the Olds.