In April, a man was shot while broadcasting himself on Facebook Live. The front-facing camera kept recording as he lay on the ground. The previous month, viewers watching a Periscope livestream suspected they were witnesses to a rape. More recently, Ohio prosecutors accused a teenager, charged with kidnapping and rape, of broadcasting her friend’s sexual assault live, also on Periscope. “She got caught up in the likes,” a prosecutor told The New York Times.

Given the changing environment, it’s entirely possible that at some point we could witness a murder on Facebook Live.

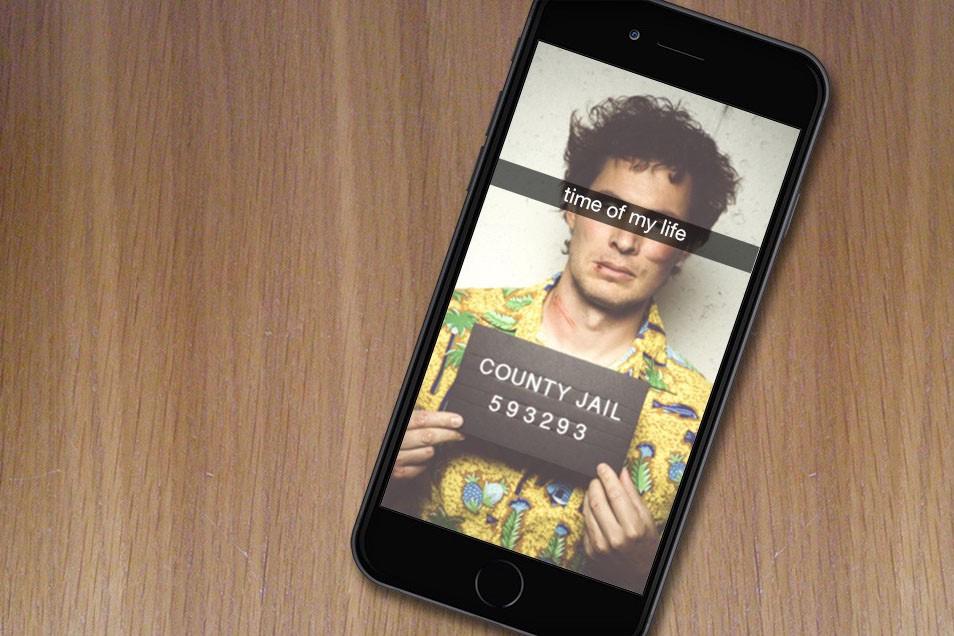

Lawbreaking boneheads (and serious criminals as well) love streaming video. Two men were arrested after bragging about plans for an armed showdown on Periscope; a woman was arrested after broadcasting herself drunk driving. And a pack of Australian teens (the most terrifying strain of Bad Teen!) asked Facebook Live viewers to egg them on as they trashed a convenience store and shouted racist insults.

So how are platforms responding? Facebook is experimenting with monitoring certain Live feeds once they start “trending.” So the next time a Live video goes BuzzFeed-watermelon-smash viral, it’s likely there will be a Facebook employee on call to shut it down if someone whips out a gun or genitalia.

But that’s just for ultra-popular streams. Most Facebook Live video monitoring still relies on user flagging. Facebook even encourages users to directly contact law enforcement if they see a crime on Live.

Most companies offering streaming live video appear to lean on users to let them know if their streaming tools are broadcasting rape and murder, not to mention lesser crimes. At any rate, they’re not forthcoming about their efforts to stop horrible shit from appearing as raw footage in our feeds — Twitter and Periscope didn’t respond to my questions about how Periscope handles criminal footage. Gamer-centric streaming platform Twitch, the latest company with potential abuse caught on its livestreams, says it will contact law enforcement “if a credible threat of imminent physical harm or actual harm to others is made on our platform and timely reported to Twitch.” But Twitch wouldn’t tell me how it discovers “imminent physical harm.” Does it rely on users flagging video? Or does it have a team or algorithm tasked with monitoring raw footage for criminal behavior? Twitch wouldn’t say, but I’m guessing it’s left to the users.

Even if these companies developed some scary-accurate Minority Report–style crime-prediction technology or deployed thousands of monitors to trawl for crime, that doesn’t mean they could stop it. Placing the responsibility on users is troubling, and the platforms might not be able to keep doing it. A recent lawsuit alleges that Snapchat’s speed filter is partially to blame for a user’s high-speed car crash … and you can bet that if that sort of legal action is successful, livestreaming platforms will take note.

This piece originally appeared in the May 6, 2016, edition of the Ringer newsletter.